Accepted to Interspeech 2022

Authors

Byeongseon Park, Ryuichi Yamamoto, Kentaro Tachibana

Abstract

We propose a unified accent estimation method for Japanese text-to-speech (TTS).

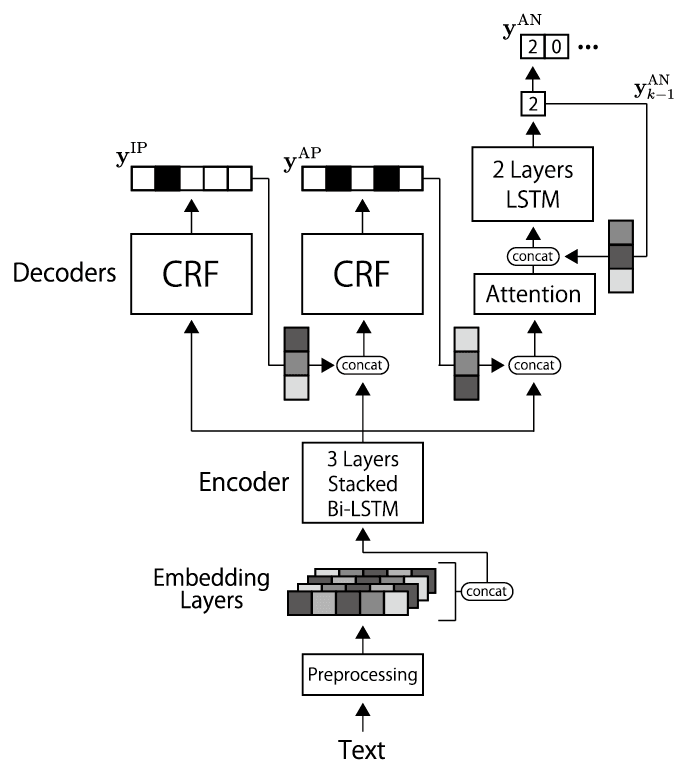

Unlike the conventional two-stage methods, which separately train two models for predicting accent phrase boundaries and accent nucleus positions, our method merges the two models and jointly optimizes the entire model in a multi-task learning framework.

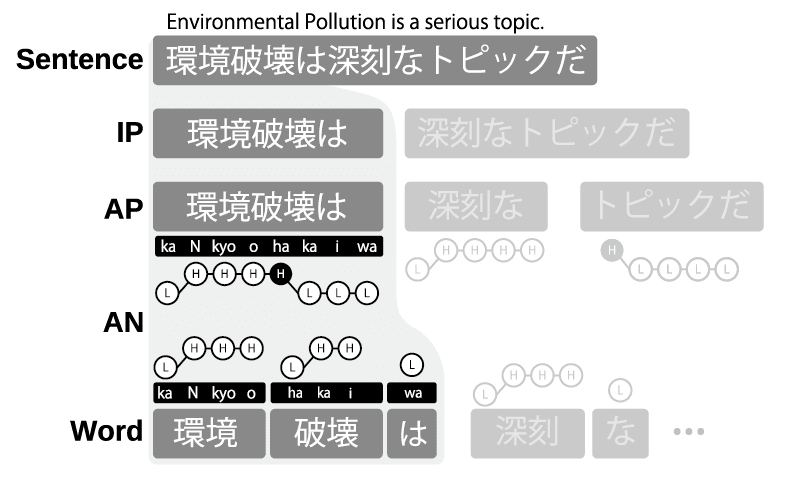

Furthermore, considering the hierarchical linguistic structure of intonation phrases (IPs), accent phrases, and accent nuclei, we generalize the proposed approach to simultaneously model the IP boundaries with accent information.

Objective evaluation results reveal that the proposed method achieves an accent estimation accuracy of 80.4%, which is 6.67% higher than the conventional two-stage method.

When the proposed method is incorporated into a neural TTS framework, the system achieves a 4.29 mean opinion score with respect to prosody naturalness.

Demo

TTS setup

- Acoustic model: FastSpeech2 [1]
- Vocoder: Parallel WaveGAN [2]

The detailed model structure and training conditions of these two models were the same as those in [3].

Target tasks

- IPs: Intonation phrases
- APs: Accent phrases
- ANs: Accent nuclei

Systems used for comparison

Models

| Model | Encoder | Decoder | Task |
| --- | --- | --- | --- |
| (a) [4] | - | CRF | AP |
| (b) [4] | - | CRF | AN |
| (c) | Bi-LSTM | CRF | IP |
| (d) | Bi-LSTM | CRF | AP |
| (e) | Bi-LSTM | AR | AN |
| (f) | Bi-LSTM | CRF, AR | AP+AN |
| (g) | Bi-LSTM | CRF, CRF, AR | IP+AP+AN |

Systems

| System | Components |
| --- | --- |
| Reference (Recording) | - |
| Reference (TTS) | - |
| (A) | (a), (b), (c) |
| (B) | (c), (d), (e) |
| (C) | (c), (f) |
| (D) | (g) |
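As a reading aid, the Models and Systems tables can be transcribed into a small lookup structure to confirm that every system covers all three target tasks with a different number of models. This is only a sketch: the dictionary layout and the helper `tasks_covered` are hypothetical names introduced here, and `None` stands for the "-" encoder entry of models (a) and (b).

```python
# Transcription of the Models and Systems tables.
# The data layout and helper name are illustrative assumptions.
MODELS = {
    "a": {"encoder": None, "decoder": ["CRF"], "tasks": ["AP"]},
    "b": {"encoder": None, "decoder": ["CRF"], "tasks": ["AN"]},
    "c": {"encoder": "Bi-LSTM", "decoder": ["CRF"], "tasks": ["IP"]},
    "d": {"encoder": "Bi-LSTM", "decoder": ["CRF"], "tasks": ["AP"]},
    "e": {"encoder": "Bi-LSTM", "decoder": ["AR"], "tasks": ["AN"]},
    "f": {"encoder": "Bi-LSTM", "decoder": ["CRF", "AR"], "tasks": ["AP", "AN"]},
    "g": {"encoder": "Bi-LSTM", "decoder": ["CRF", "CRF", "AR"],
          "tasks": ["IP", "AP", "AN"]},
}

SYSTEMS = {
    "A": ["a", "b", "c"],
    "B": ["c", "d", "e"],
    "C": ["c", "f"],
    "D": ["g"],
}


def tasks_covered(system):
    """Return the tasks handled by all component models of a system."""
    return [task for model in SYSTEMS[system] for task in MODELS[model]["tasks"]]


for name, components in SYSTEMS.items():
    print(name, len(components), "model(s):", sorted(tasks_covered(name)))
```

Read this way, systems (A) and (B) are three-model pipelines, (C) merges the AP and AN models, and (D) uses a single model for all three tasks.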

Audio samples (Japanese)

Tags for simplified output

| Tag | Meaning |
| --- | --- |
| + | accent with high pitch |
| - | accent with low pitch |
| / | accent phrase boundary |
| # | intonation phrase boundary |
| (blue tag) | is correct (e.g., +: correct accent) |
| (red tag) | is incorrect (e.g., +: incorrect accent) |
| _ | phrase boundary missing |
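The tag notation above can be read mechanically. The following is a minimal sketch of a parser for it, assuming tokens are separated by spaces, that `#` also closes the current accent phrase, and that `_` (a missing boundary) and the final `.` carry no boundary. The names `Mora`, `parse_simplified`, and `nucleus_position` are hypothetical, and taking the accent nucleus to be the last high mora before a fall inside the phrase is a simplifying assumption, not a description of the authors' tooling.

```python
from dataclasses import dataclass


@dataclass
class Mora:
    text: str   # romanized mora, e.g. "chi"
    high: bool  # True for "+", False for "-"


def parse_simplified(output):
    """Parse a tagged string into intonation phrases > accent phrases > moras."""
    phrases = [[[]]]
    for token in output.split():
        if token == "#":            # intonation phrase boundary
            phrases.append([[]])
        elif token == "/":          # accent phrase boundary
            phrases[-1].append([])
        elif token in ("_", "."):   # missing boundary marker, sentence end
            continue
        elif len(token) > 1 and token[-1] in "+-":
            phrases[-1][-1].append(Mora(token[:-1], token[-1] == "+"))
        else:
            raise ValueError(f"unrecognized token: {token!r}")
    return phrases


def nucleus_position(accent_phrase):
    """1-based index of the mora before the high-to-low fall, 0 if no fall."""
    for i in range(len(accent_phrase) - 1):
        if accent_phrase[i].high and not accent_phrase[i + 1].high:
            return i + 1
    return 0


ips = parse_simplified(
    "ni- chi+ da+ i+ / a- me+ fu+ to+ / ha- N+ so+ ku+ mo+ N- da- i- ."
)
print([len(ap) for ap in ips[0]])               # moras per accent phrase
print([nucleus_position(ap) for ap in ips[0]])  # nucleus per accent phrase
```

For this input the sketch yields one intonation phrase with three accent phrases, of which only the last contains a pitch fall.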

Sample 1: "日大アメフト反則問題。"

| System | Simplified output |
| --- | --- |
| Reference(TTS) | ni- chi+ da+ i+ / a- me+ fu+ to+ / ha- N+ so+ ku+ mo+ N- da- i- . |
| System(A) | ni- chi+ da+ i+ _ a+ me- fu- to- / ha- N+ so+ ku+ mo+ N- da- i- . |
| System(B) | ni- chi+ da+ i+ _ a- me- fu- to- / ha- N+ so+ ku+ mo+ N+ da+ i+ . |
| System(C) | ni- chi+ da+ i+ / a- me+ fu+ to+ / ha- N+ so+ ku+ mo+ N- da- i- . |
| System(D) | ni- chi+ da+ i+ / a- me+ fu+ to+ / ha- N+ so+ ku+ mo+ N- da- i- . |

Sample 2: "あなたとおはなししている時以外のスケジュールは、内緒ですよ。"

| System | Simplified output |
| --- | --- |
| Reference(Recording) | - |
| Reference(TTS) | a- na+ ta- to- / o- ha+ na+ shi+ shi+ te+ i+ ru+ / to- ki+ i+ ga- i- no- / su- ke+ jyuu+ ru- wa- # na- i+ shyo+ de+ su- yo- . |
| System(A) | a- na+ ta- to- / o- ha+ na+ shi+ shi+ te+ i+ ru+ / to+ ki- / i+ ga- i- no- / su- ke+ jyuu+ ru- wa- # na- i+ shyo+ de+ su- yo- . |
| System(B) | a- na+ ta- to- / o- ha+ na+ shi+ shi+ te+ i+ ru+ / to+ ki- i- ga- i- no- / su+ ke- jyuu- ru- wa- # na- i+ shyo+ de- su- yo- . |
| System(C) | a- na+ ta- to- / o- ha+ na+ shi+ shi+ te+ i+ ru+ / to+ ki- / i+ ga- i- no- / su- ke+ jyuu+ ru- wa- # na- i+ shyo+ de+ su+ yo+ . |
| System(D) | a- na+ ta- to- / o- ha+ na+ shi+ shi+ te+ i+ ru+ / to+ ki- / i+ ga- i- no- / su- ke+ jyuu+ ru- wa- # na- i+ shyo+ de+ su+ yo+ . |

Sample 3: "後あとトラブルになる可能性が高いですよ。"

| System | Simplified output |
| --- | --- |
| Reference(Recording) | - |
| Reference(TTS) | a- to+ a+ to+ / to- ra+ bu- ru- ni- / na+ ru- / ka- noo+ see+ ga+ / ta- ka+ i- de- su- yo- . |
| System(A) | a- to+ a+ to- / to- ra+ bu- ru- ni- / na+ ru- / ka- noo+ see+ ga+ / ta- ka+ i- de- su- yo- . |
| System(B) | a+ to- a- to- _ to- ra- bu- ru- ni- / na+ ru- / ka- noo+ see+ ga+ / ta- ka+ i- de- su- yo- . |
| System(C) | a+ to- a- to- / to- ra+ bu- ru- ni- / na+ ru- / ka- noo+ see+ ga+ / ta- ka+ i- de- su- yo- . |
| System(D) | a+ to- a- to- / to- ra+ bu- ru- ni- / na+ ru- / ka- noo+ see+ ga+ / ta- ka+ i- de- su- yo- . |

Sample 4: "「今世紀に入ってから1番楽しみな食事会」だと期待を寄せた。"

| System | Simplified output |
| --- | --- |
| Reference(Recording) | - |
| Reference(TTS) | ko- N+ see+ ki- ni- / ha+ i- Q- te- ka- ra- # i- chi+ ba+ N+ / ta- no+ shi+ mi- na- / shyo- ku+ ji+ ka+ i+ da+ to- # ki- ta+ i+ o+ / yo- se+ ta+ . |
| System(A) | ko- N+ see+ ki- ni- / ha+ i- Q- te- ka- ra- # i- chi+ ba+ N+ / ta- no+ shi+ mi- na- / shyo- ku+ ji+ ka+ i- da- to- / ki- ta+ i+ o+ / yo- se+ ta+ . |
| System(B) | ko+ N- see- ki- ni- / ha+ i- Q- te- ka- ra- # i+ chi- ba- N- / ta- no+ shi+ mi+ na+ / shyo- ku+ ji+ ka- i- da- to- / ki- ta+ i+ o- / yo- se+ ta+ . |
| System(C) | ko- N+ see+ ki- ni- / ha+ i- Q- te- ka- ra- # i- chi+ ba+ N+ / ta- no+ shi+ mi- na- / shyo- ku+ ji+ ka- i- da- to- / ki- ta+ i+ o+ / yo- se+ ta+ . |
| System(D) | ko+ N- / see+ ki- ni- / ha+ i- Q- te- ka- ra- # i- chi+ ba+ N+ / ta- no+ shi+ mi- na- / shyo- ku+ ji+ ka- i- da- to- # ki- ta+ i+ o+ / yo- se+ ta+ . |

Sample 5: "23日に心臓発作で倒れ、ロス市内の病院に入院していた女優のキャリー・フィッシャーさんが現地時間の27日朝、60歳で死去。"

| System | Simplified output |
| --- | --- |
| Reference(Recording) | - |
| Reference(TTS) | ni+ jyuu- / sa+ N- ni- chi- ni- # shi- N+ zoo+ ho+ Q- sa- de- / ta- o+ re- # ro- su+ shi+ na- i- no- / byoo- i+ N+ ni+ / nyuu- i+ N+ shi+ te+ i+ ta+ # jyo- yuu+ no+ / kya- rii+ fi+ Q- shyaa- sa- N- ga- # ge- N+ chi+ ji+ ka- N- no- / ni+ jyuu- / shi- chi+ ni+ chi+ / a+ sa- # ro- ku+ jyu+ Q- sa- i- de- / shi+ kyo- . |
| System(A) | ni+ jyuu- / sa+ N- ni- chi- ni- # shi- N+ zoo+ ho+ Q- sa- de- / ta- o+ re- # ro- su+ shi+ na- i- no- / byoo- i+ N+ ni+ / nyuu- i+ N+ shi+ te+ i+ ta+ # jyo- yuu+ no+ # kya- rii+ fi+ Q- shyaa- sa- N- ga- # ge- N+ chi+ ji+ ka- N- no- / ni+ jyuu- / shi- chi+ ni+ chi+ / a+ sa- # ro- ku+ jyu+ Q- sa- i- de- / shi+ kyo- . |
| System(B) | ni+ jyuu- / sa+ N- ni- chi- ni- # shi- N+ zoo+ ho+ Q- sa- de- / ta- o+ re- # ro- su+ shi+ na- i- no- / byoo- i+ N+ ni+ / nyuu- i+ N+ shi+ te+ i+ ta+ # jyo- yuu+ no+ # kya- rii+ fi+ Q- shyaa- sa- N- ga- # ge+ N- chi- ji- ka- N- no- / ni- jyuu+ / shi+ chi- ni- chi- / a- sa+ # ro+ ku- jyu- Q- sa- i- de- / shi- kyo+ . |
| System(C) | ni+ jyuu- / sa+ N- ni- chi- ni- # shi- N+ zoo+ ho+ Q- sa- de- / ta- o+ re- # ro- su+ shi+ na- i- no- / byoo- i+ N+ ni+ / nyuu- i+ N+ shi+ te+ i+ ta+ # jyo- yuu+ no+ # kya- rii+ fi+ Q- shyaa- sa- N- ga- # ge- N+ chi+ ji+ ka- N- no- / ni+ jyuu- / shi- chi+ ni+ chi+ / a+ sa- # ro- ku+ jyu+ Q- sa- i- de- / shi+ kyo- . |
| System(D) | ni+ jyuu- / sa+ N- ni- chi- ni- # shi- N+ zoo+ ho+ Q- sa- de- / ta- o+ re- # ro- su+ shi+ na- i- no- / byoo- i+ N+ ni+ / nyuu- i+ N+ shi+ te+ i+ ta+ # jyo- yuu+ no+ # kya- rii+ fi+ Q- shyaa- sa- N- ga- # ge- N+ chi+ ji+ ka- N- no- / ni+ jyuu- / shi- chi+ ni+ chi+ / a+ sa- # ro- ku+ jyu+ Q- sa- i- de- / shi+ kyo- . |
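The correct and incorrect tags in the samples above can be approximated by comparing each system output with Reference(TTS). The sketch below is one possible way to do so, not the evaluation procedure of the paper: it assumes both strings contain the same moras in the same order, compares pitch tags mora by mora, and compares boundaries by the number of moras that precede them. The function names `split_tags` and `compare` are hypothetical.

```python
def split_tags(output):
    """Separate a tagged string into moras and boundary positions."""
    moras = []        # list of (text, pitch) with pitch in {"+", "-"}
    boundaries = {}   # number of preceding moras -> "/" or "#"
    for token in output.split():
        if token in ("/", "#"):
            boundaries[len(moras)] = token
        elif token in ("_", "."):
            continue  # "_" marks a missing boundary, "." ends the sentence
        else:
            moras.append((token[:-1], token[-1]))
    return moras, boundaries


def compare(reference, system):
    """Return (mora indices with wrong pitch, positions with wrong boundary)."""
    ref_moras, ref_bounds = split_tags(reference)
    sys_moras, sys_bounds = split_tags(system)
    if [m for m, _ in ref_moras] != [m for m, _ in sys_moras]:
        raise ValueError("mora sequences differ; cannot align")
    pitch_errors = [
        i for i, (r, s) in enumerate(zip(ref_moras, sys_moras)) if r[1] != s[1]
    ]
    boundary_errors = sorted(
        k for k in ref_bounds.keys() | sys_bounds.keys()
        if ref_bounds.get(k) != sys_bounds.get(k)
    )
    return pitch_errors, boundary_errors


# Sample 1 of this section
reference = "ni- chi+ da+ i+ / a- me+ fu+ to+ / ha- N+ so+ ku+ mo+ N- da- i- ."
system_a = "ni- chi+ da+ i+ _ a+ me- fu- to- / ha- N+ so+ ku+ mo+ N- da- i- ."
system_c = "ni- chi+ da+ i+ / a- me+ fu+ to+ / ha- N+ so+ ku+ mo+ N- da- i- ."

print(compare(reference, system_a))  # → ([4, 5, 6, 7], [4])
print(compare(reference, system_c))  # → ([], [])
```

For Sample 1, System(A) misses the boundary after "nichidai" and mispredicts the pitch of the four moras of "amefuto", while System(C) matches the reference exactly.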

States used for comparison

- Recording: Recorded speech.
- X: The speech synthesised using wrong IP, AP, and AN information.
- IP: The speech synthesised using correct IP information, with wrong AP and AN.
- AP: The speech synthesised using correct AP information, with wrong IP and AN.
- AN: The speech synthesised using correct AN information, with wrong IP and AP.
- IP+AP: The speech synthesised using correct IP and AP information, with wrong AN.
- IP+AN: The speech synthesised using correct IP and AN information, with wrong AP.
- AP+AN: The speech synthesised using correct AP and AN information, with wrong IP.
- IP+AP+AN: The speech synthesised using correct IP, AP, and AN information.

Audio samples (Japanese)

Sample 1: "最初にすることは早起き、次にするのが二度寝、その次が後悔。"

| State | Simplified output |
| --- | --- |
| Recording | - |
| X | sa- i+ shyo+ ni+ / su- ru+ / ko- to+ wa- # ha- ya+ o- ki- # tsu- gi+ ni- / su- ru+ no- ga- / ni+ do- / ne- # so- no+ / tsu- gi+ ga- / koo+ ka- i- . |
| IP | sa- i+ shyo+ ni+ / su- ru+ / ko- to+ wa- # ha- ya+ o- ki- # tsu- gi+ ni- / su- ru+ no- ga- # ni+ do- / ne- # so- no+ / tsu- gi+ ga- # koo+ ka- i- . |
| AP | sa- i+ shyo+ ni+ / su- ru+ / ko- to+ wa- # ha- ya+ o- ki- # tsu- gi+ ni- / su- ru+ no- ga- / ni+ do- ne- # so- no+ / tsu- gi+ ga- / koo+ ka- i- . |
| AN | sa- i+ shyo+ ni+ / su- ru+ / ko- to+ wa- # ha- ya+ o- ki- # tsu- gi+ ni- / su- ru+ no- ga- / ni- do+ / ne+ # so- no+ / tsu- gi+ ga- / koo+ ka- i- . |
| IP+AP | sa- i+ shyo+ ni+ / su- ru+ / ko- to+ wa- # ha- ya+ o- ki- # tsu- gi+ ni- / su- ru+ no- ga- # ni+ do- ne- # so- no+ / tsu- gi+ ga- # koo+ ka- i- . |
| IP+AN | sa- i+ shyo+ ni+ / su- ru+ / ko- to+ wa- # ha- ya+ o- ki- # tsu- gi+ ni- / su- ru+ no- ga- # ni- do+ / ne+ # so- no+ / tsu- gi+ ga- # koo+ ka- i- . |
| AP+AN | sa- i+ shyo+ ni+ / su- ru+ / ko- to+ wa- / ha- ya+ o- ki- # tsu- gi+ ni- / su- ru+ no- ga- / ni- do+ ne+ # so- no+ / tsu- gi+ ga- / koo+ ka- i- . |
| IP+AP+AN | sa- i+ shyo+ ni+ / su- ru+ / ko- to+ wa- # ha- ya+ o- ki- # tsu- gi+ ni- / su- ru+ no- ga- # ni- do+ ne+ # so- no+ / tsu- gi+ ga- # koo+ ka- i- . |

Sample 2: "メキシコ留学中の、エーケービーフォーティーエイト入山杏奈が一時帰国。"

| State | Simplified output |
| --- | --- |
| Recording | - |
| X | me- ki+ shi+ ko+ _ ryuu+ ga+ ku+ chyuu+ no+ # ee- kee+ bii+ _ foo- tii- e- i- to- # i- ri+ ya+ ma+ / a+ N- na- ga- # i- chi+ ji- / ki- ko+ ku+ . |
| IP | me- ki+ shi+ ko+ _ ryuu+ ga+ ku+ chyuu+ no+ # ee- kee+ bii+ _ foo- tii- e- i- to- # i- ri+ ya+ ma+ / a+ N- na- ga- / i- chi+ ji- / ki- ko+ ku+ . |
| AP | me- ki+ shi+ ko+ / ryuu+ ga- ku- chyuu- no- # ee- kee+ bii+ / foo+ tii- e- i- to- # i- ri+ ya+ ma+ / a+ N- na- ga- # i- chi+ ji- / ki- ko+ ku+ . |
| AN | me- ki+ shi+ ko+ _ ryuu- ga- ku- chyuu- no- # ee- kee+ bii+ _ foo+ tii+ e+ i+ to+ # i- ri+ ya+ ma+ / a+ N- na- ga- # i- chi+ ji- / ki- ko+ ku+ . |
| IP+AP | me- ki+ shi+ ko+ / ryuu+ ga- ku- chyuu- no- # ee- kee+ bii+ / foo+ tii- e- i- to- # i- ri+ ya+ ma+ / a+ N- na- ga- / i- chi+ ji- / ki- ko+ ku+ . |
| IP+AN | me- ki+ shi+ ko+ _ ryuu- ga- ku- chyuu- no- # ee- kee+ bii+ _ foo+ tii+ e+ i+ to+ # i- ri+ ya+ ma+ / a+ N- na- ga- / i- chi+ ji- / ki- ko+ ku+ . |
| AP+AN | me- ki+ shi+ ko+ / ryuu- ga+ ku+ chyuu+ no+ # ee- kee+ bii+ / foo- tii+ e+ i- to- # i- ri+ ya+ ma+ / a+ N- na- ga- # i- chi+ ji- / ki- ko+ ku+ . |
| IP+AP+AN | me- ki+ shi+ ko+ / ryuu- ga+ ku+ chyuu+ no+ # ee- kee+ bii+ / foo- tii+ e+ i- to- # i- ri+ ya+ ma+ / a+ N- na- ga- / i- chi+ ji- / ki- ko+ ku+ . |

Sample 3: "私なんて、若い頃の貯金食いつぶし生活で、40代になってからはあんまり働いてないですもん。"

| State | Simplified output |
| --- | --- |
| Recording | - |
| X | wa- ta+ shi+ na+ N- te- # wa- ka+ i- / ko+ ro- no- / chyo- ki+ N+ # ku- i+ tsu+ bu+ shi- / see- ka+ tsu+ de+ # yo- N+ jyuu+ da- i- ni- / na+ Q- te- ka- ra- wa- # a- N+ ma+ ri+ / ha- ta+ ra+ i+ te+ na+ i+ de+ su+ mo+ N+ . |
| IP | wa- ta+ shi+ na+ N- te- # wa- ka+ i- / ko+ ro- no- / chyo- ki+ N+ / ku- i+ tsu+ bu+ shi- / see- ka+ tsu+ de+ # yo- N+ jyuu+ da- i- ni- / na+ Q- te- ka- ra- wa- # a- N+ ma+ ri+ / ha- ta+ ra+ i+ te+ na+ i+ de+ su+ mo+ N+ . |
| AP | wa- ta+ shi+ na+ N- te- # wa- ka+ i- / ko+ ro- no- / chyo- ki+ N+ # ku- i+ tsu+ bu+ shi- see- ka- tsu- de- # yo- N+ jyuu+ da- i- ni- / na+ Q- te- ka- ra- wa- # a- N+ ma+ ri+ / ha- ta+ ra+ i+ te+ na+ i+ de+ su+ mo+ N+ . |
| AN | wa- ta+ shi+ na+ N- te- # wa- ka+ i- / ko+ ro- no- / chyo- ki+ N+ # ku- i+ tsu+ bu+ shi+ / see+ ka- tsu- de- # yo- N+ jyuu+ da- i- ni- / na+ Q- te- ka- ra- wa- # a- N+ ma+ ri+ / ha- ta+ ra+ i+ te+ na+ i- de- su- mo- N- . |
| IP+AP | wa- ta+ shi+ na+ N- te- # wa- ka+ i- / ko+ ro- no- / chyo- ki+ N+ / ku- i+ tsu+ bu+ shi- see- ka- tsu- de- # yo- N+ jyuu+ da- i- ni- / na+ Q- te- ka- ra- wa- # a- N+ ma+ ri+ / ha- ta+ ra+ i+ te+ na+ i+ de+ su+ mo+ N+ . |
| IP+AN | wa- ta+ shi+ na+ N- te- # wa- ka+ i- / ko+ ro- no- / chyo- ki+ N+ / ku- i+ tsu+ bu+ shi+ / see+ ka- tsu- de- # yo- N+ jyuu+ da- i- ni- / na+ Q- te- ka- ra- wa- # a- N+ ma+ ri+ / ha- ta+ ra+ i+ te+ na+ i- de- su- mo- N- . |
| AP+AN | wa- ta+ shi+ na+ N- te- # wa- ka+ i- / ko+ ro- no- / chyo- ki+ N+ # ku- i+ tsu+ bu+ shi+ see+ ka- tsu- de- # yo- N+ jyuu+ da- i- ni- / na+ Q- te- ka- ra- wa- # a- N+ ma+ ri+ / ha- ta+ ra+ i+ te+ na+ i- de- su- mo- N- . |
| IP+AP+AN | wa- ta+ shi+ na+ N- te- # wa- ka+ i- / ko+ ro- no- / chyo- ki+ N+ / ku- i+ tsu+ bu+ shi+ see+ ka- tsu- de- # yo- N+ jyuu+ da- i- ni- / na+ Q- te- ka- ra- wa- # a- N+ ma+ ri+ / ha- ta+ ra+ i+ te+ na+ i- de- su- mo- N- . |
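The eight synthesised states used in this section correspond to every subset of the three information types (IP, AP, AN) being correct, with the remaining types wrong. A minimal sketch that enumerates them in the same order as the list of states is given below; the helper `enumerate_states` is a hypothetical name introduced only for illustration.

```python
from itertools import combinations

TASKS = ("IP", "AP", "AN")


def enumerate_states(tasks=TASKS):
    """List (name, correct types, wrong types) for every subset of tasks."""
    states = []
    for size in range(len(tasks) + 1):
        for correct in combinations(tasks, size):
            wrong = tuple(t for t in tasks if t not in correct)
            # The state with no correct information is labelled "X".
            name = "+".join(correct) or "X"
            states.append((name, correct, wrong))
    return states


for name, correct, wrong in enumerate_states():
    print(f"{name:9s} correct={list(correct)} wrong={list(wrong)}")
```

Together with the recorded speech, this gives the nine conditions presented for each sample.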

References

[1] Y. Ren, C. Hu, X. Tan, S. Zhao, Z. Zhao, and T.-Y. Liu, “FastSpeech 2: Fast and high-quality end-to-end text-to-speech,” in Proc. ICLR, 2021 (arXiv).

[2] R. Yamamoto, E. Song, and J.-M. Kim, “Parallel WaveGAN: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram,” in Proc. ICASSP, 2020, pp. 6199–6203 (arXiv).

[3] R. Yamamoto, E. Song, M.-J. Hwang, and J.-M. Kim, “Parallel waveform synthesis based on generative adversarial networks with voicing-aware conditional discriminators,” in Proc. ICASSP, 2021, pp. 6039–6043 (arXiv).

[4] M. Suzuki, R. Kuroiwa, K. Innami, S. Kobayashi, S. Shimizu, N. Minematsu, and K. Hirose, “Accent sandhi estimation of Tokyo dialect of Japanese using conditional random fields,” IEICE Trans., vol. E100-D, no. 4, pp. 655–661, 2017 (IEICE).

Acknowledgements

This work was supported by Clova Voice, NAVER Corp., Seongnam, Korea.

The authors would like to thank Yuma Shirahata and Kosuke Futamata at LINE Corp., Tokyo, Japan, for their support.